Understanding and Computing the Hilbert Regularity

| 🛈 |

|---|

| I originally wrote this post when working at AS Discrete Mathematics as part of a project sponsored by the Ethereum Foundation. It is reproduced here with friendly permission. |

When attacking AOCs using Gröbner bases, the most relevant question is: how complex is the Gröbner basis computation? One commonly used estimation is based on the degree of regularity. Intuitively, the degree of regularity is the degree of the highest-degree polynomials to appear during the Gröbner basis computation. (Whether this metric is good for estimating the AOC's security is a different matter.)

Unfortunately, different authors define the term “degree of regularity” differently. In this post, I use the understanding of Bardet et al. [1,2], which coincides with the well-defined Hilbert regularity.

I first introduce the required concepts, and then make them more tangible with some examples. Lastly, there is some sagemath code with which the Hilbert regularity can be computed.

Definition of the Hilbert Regularity

Let be some field, a polynomial ring in variables over , and a polynomial ideal of .

The affine Hilbert function of quotient ring is defined as For some large enough value , the Hilbert function of all can be expressed as a polynomial in . For a general treatment of the how? and why?, have a look at the excellent book “Ideals, Varieties, and Algorithms,” [3] in particular Chapter 9, §2, Theorem 6, and Chapter 9, §3. The examples in this post hopefully shed some light, too. This polynomial, denoted , is called Hilbert polynomial. By definition, the values of the Hilbert function and the Hilbert polynomial coincide for values greater than .

The Hilbert regularity is the smallest such that for all , the evaluation of the Hilbert function in equals the evaluation of the Hilbert polynomial in

By the rank-nullity theorem, we can equivalently write the Hilbert function as This is a little bit easier to handle, because we can look at and separately and can ignore the quotient ring for the moment. By augmenting with a graded monomial order, like degrevlex, we can go one step further and look at leading monomials only: the set is a basis for as an -vector space. See [3, Chapter 9, §3, Proposition 4] for a full proof. Meaning we don't even need to look at , but can restrict ourselves to the ideal of leading monomials .

One way to get a good grip on is through reduced Gröbner bases. A Gröbner basis for ideal is a finite set of polynomials with the property and, more relevant right now, This means it's sufficient to look at (the right combinations) of elements of This, in turn, is more manageable because always has finitely many elements, but might not.

Example: 0-Dimensional Ideal

Let's start with a super simple polynomial system: for some finite field This is a zero-dimensional, monomial (thus homogeneous) ideal. That's about as special as a special case can get. Note that here, we have , but this doesn't generally hold. Dealing with a super-special case also means that the Hilbert polynomial is relatively boring, but that's fine for starting out. is the reduced Gröbner basis for , and we'll use its elements to help computing the Hilbert function.

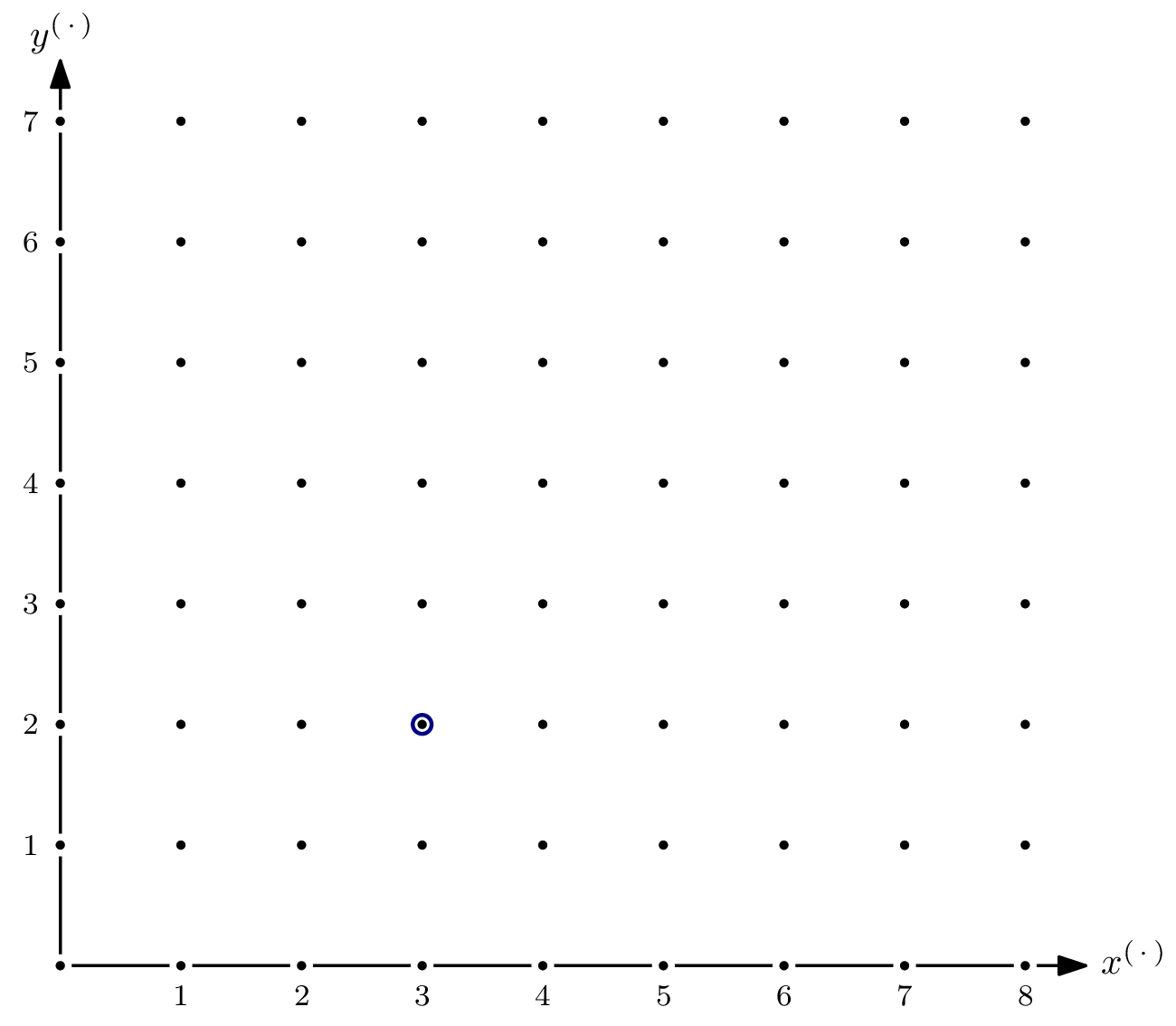

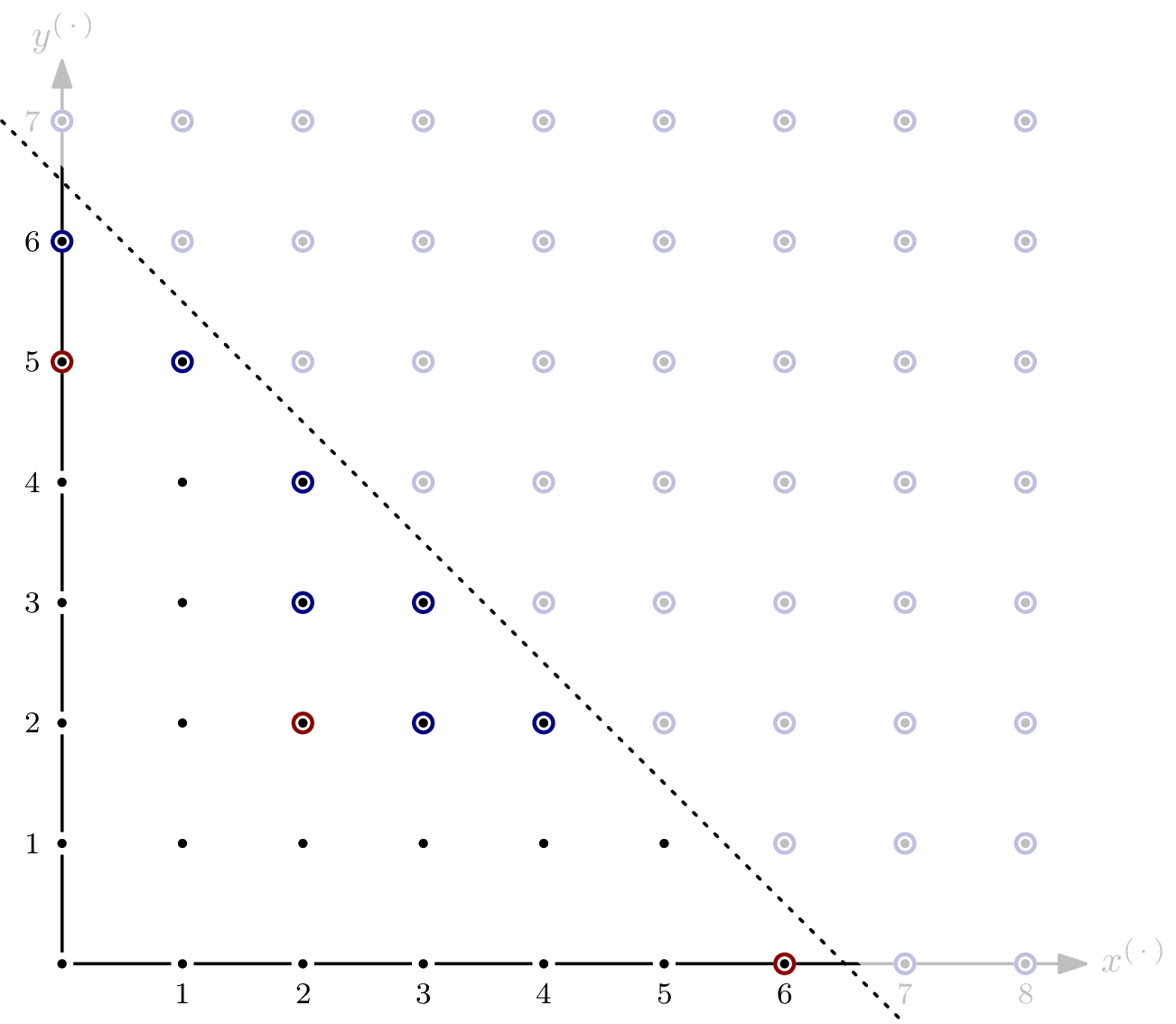

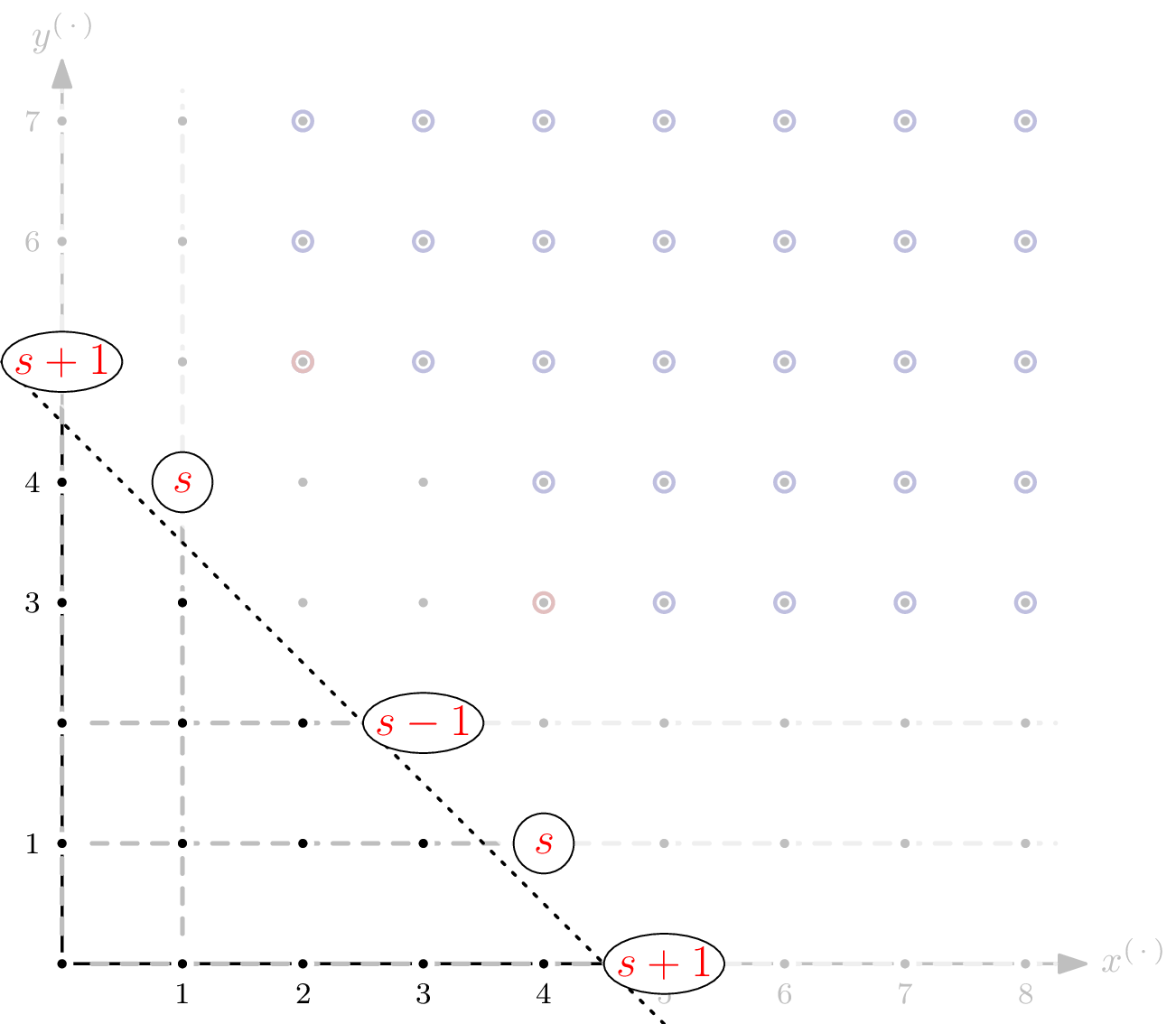

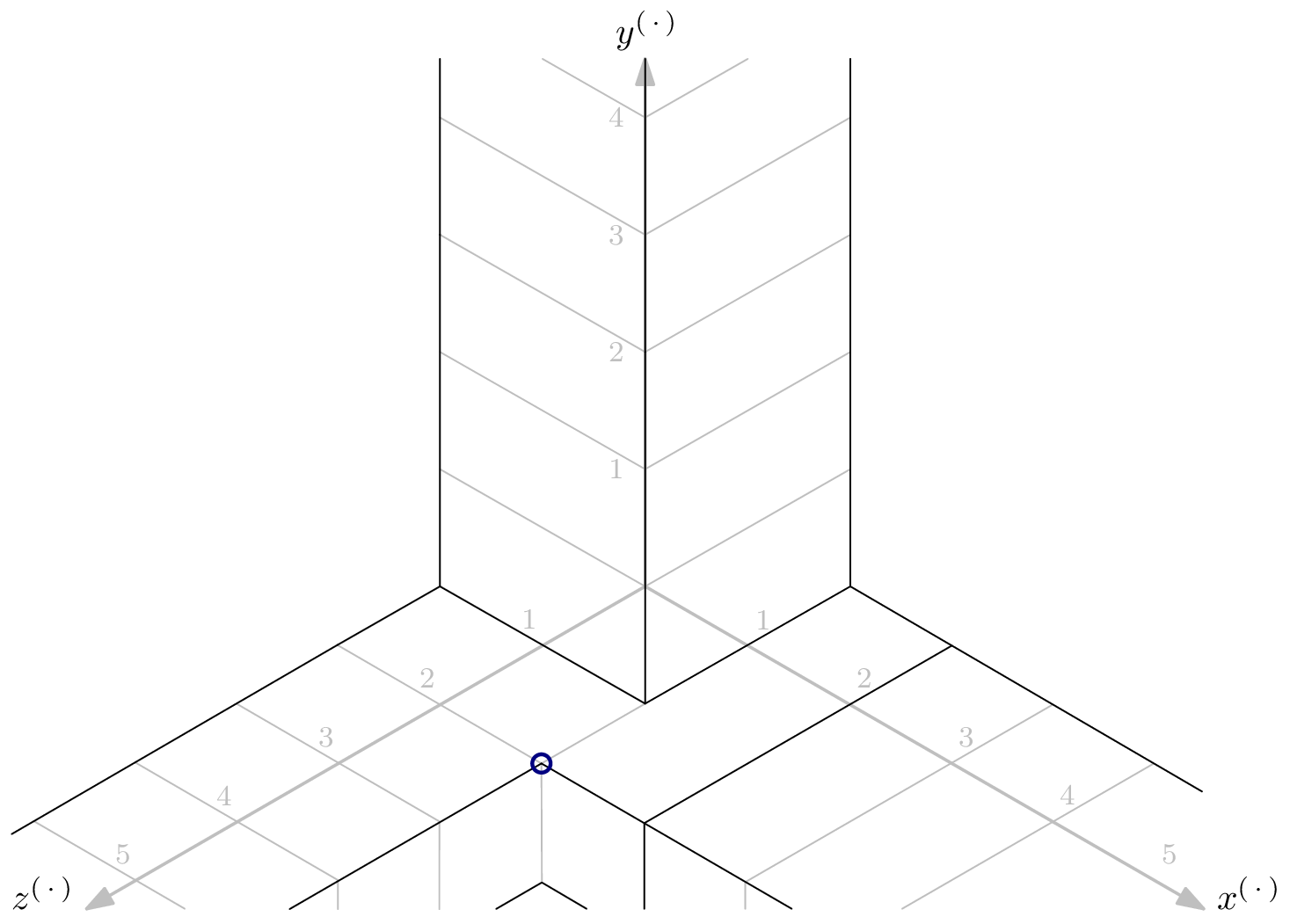

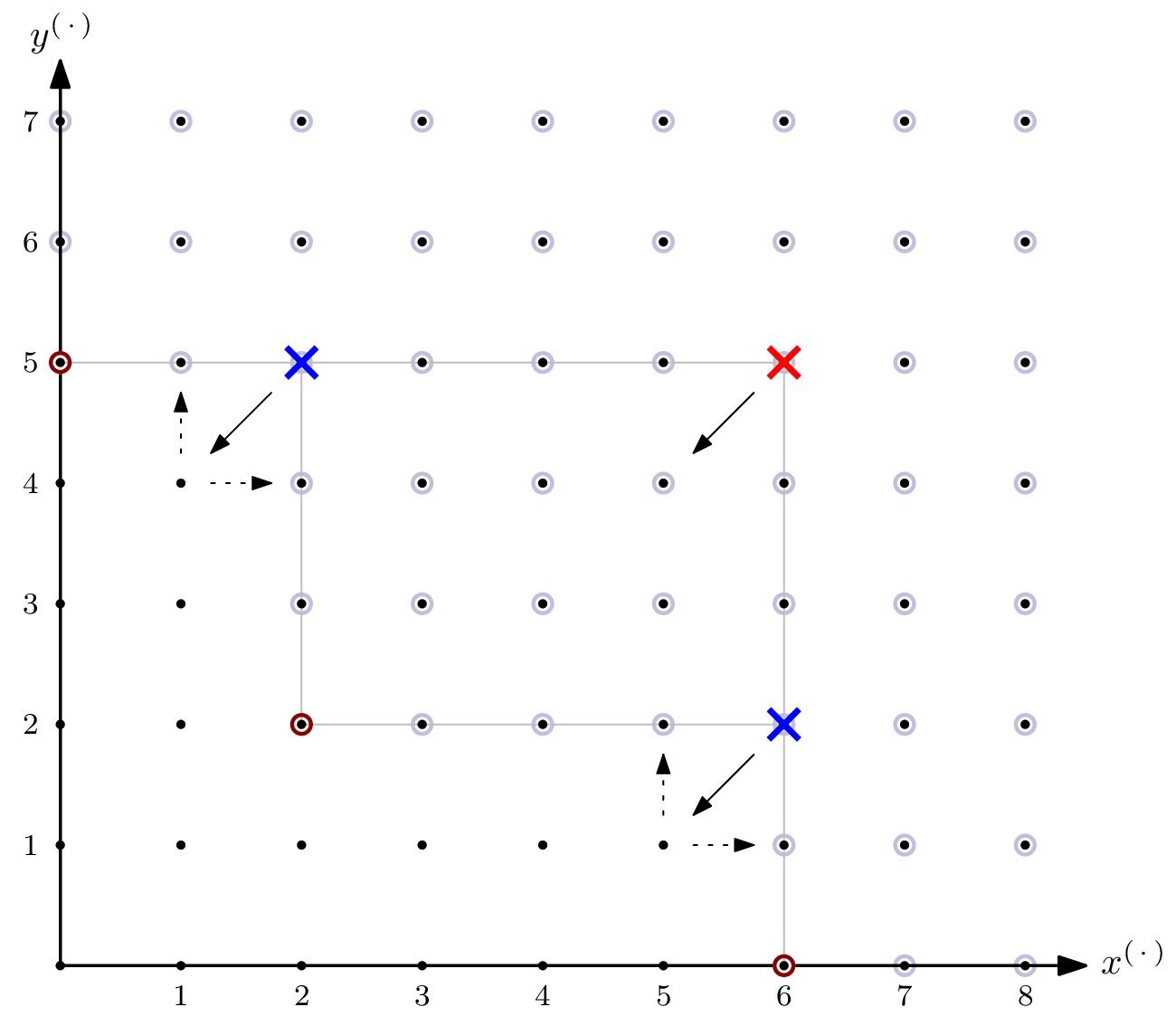

A benefit of ideals in two variables is: we can draw pictures.

This is what the monomials of as an -vector space look like. Well, at least the part After all, as an -vector space has infinite dimension. I have (arbitrarily) highlighted i.e., coordinate (3,2), to give a better understanding of what the picture means.

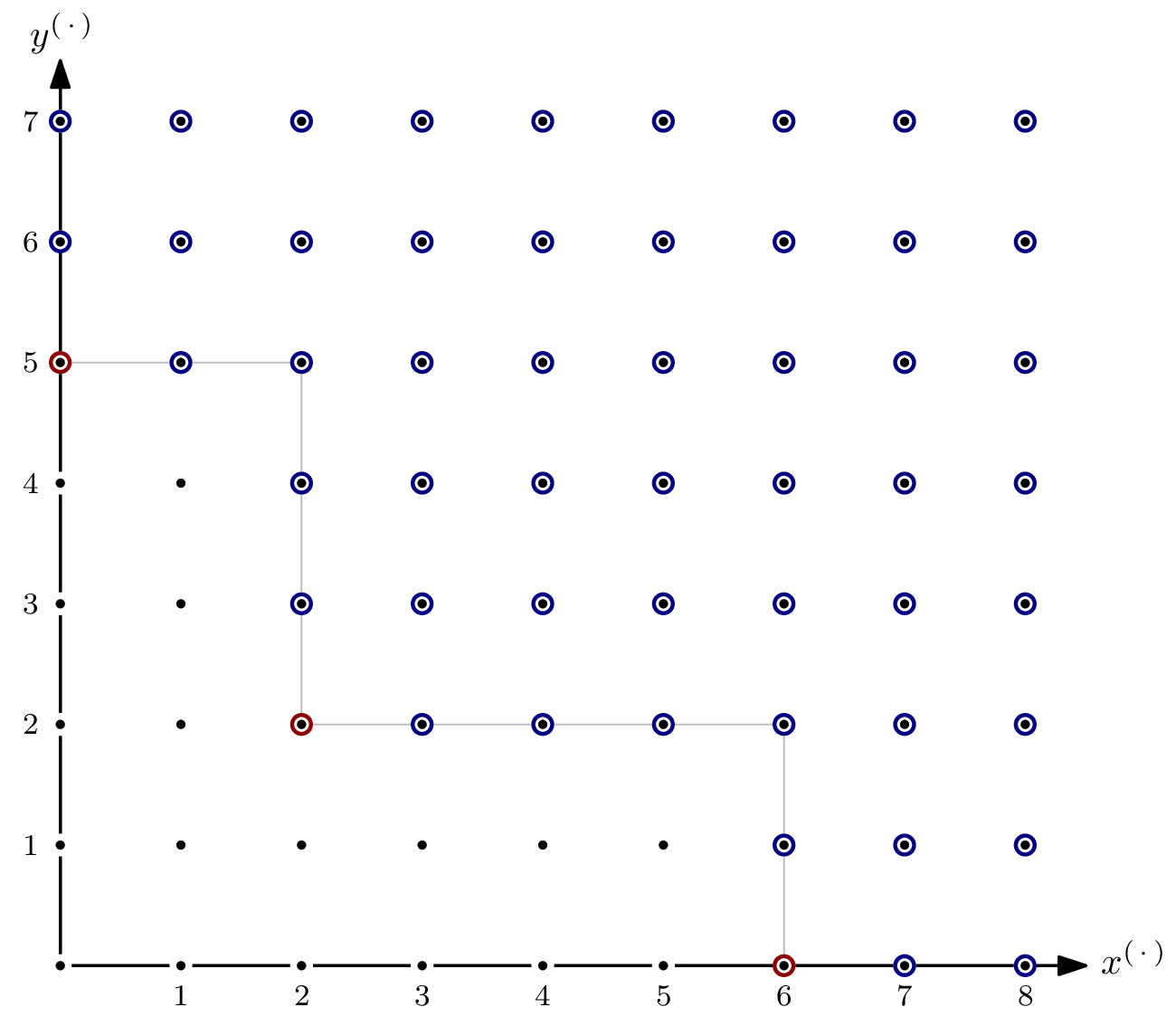

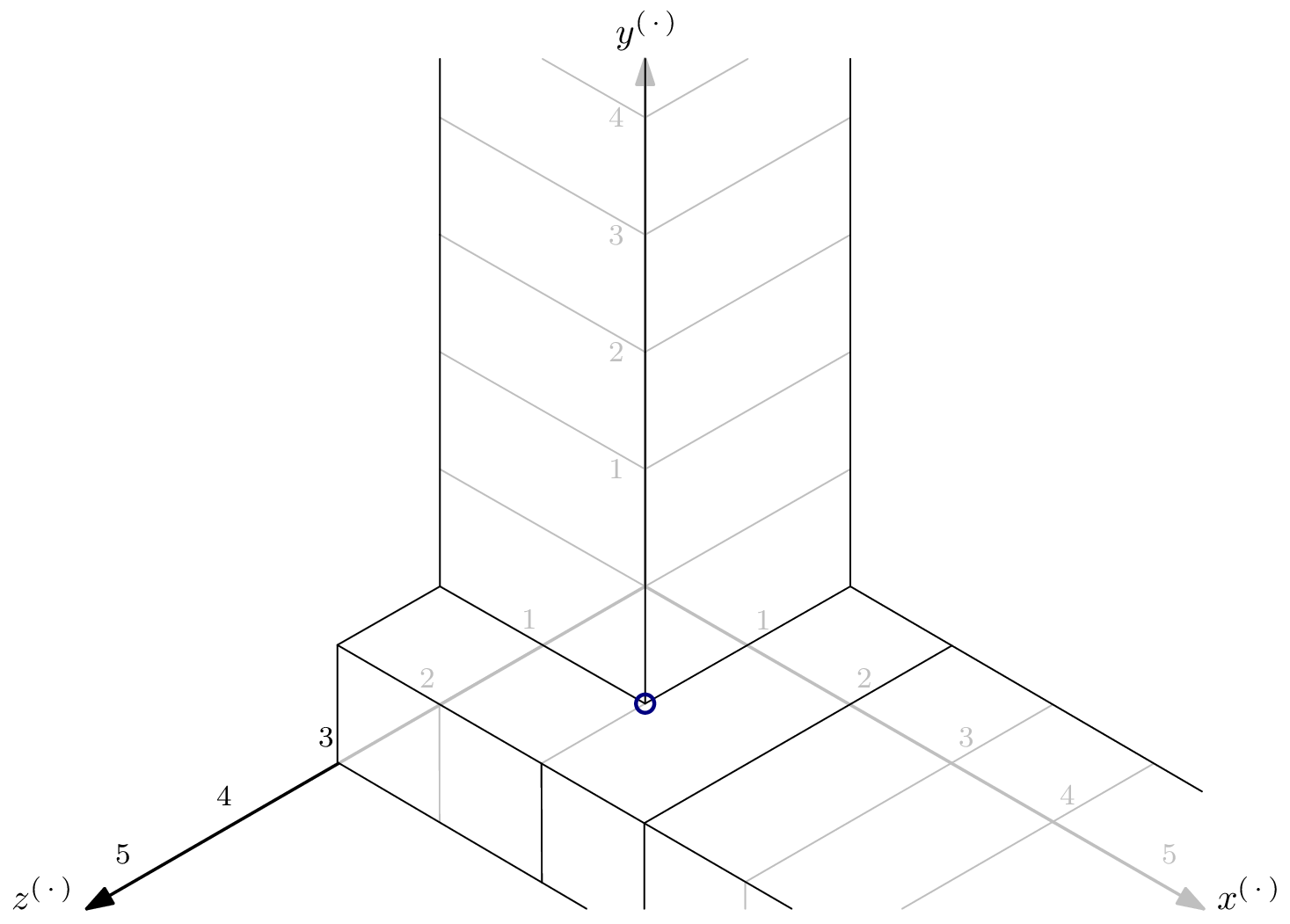

Since is a monomial ideal, we can highlight every element in . The circles of the elements of the Gröbner basis are red. The zig-zig pattern of the boundary between and is inherent, and generalizes to higher dimensions, i.e., more variables. Because of the zig-zagging, the set of monomials not in is referred to as staircase of

Let's start computing the Hilbert function for The -vector space dimensions of and are simply the number of monomials in respectively with degree Getting those numbers is easy – it amounts to counting dots in the picture! For example, for , we have

No monomial of total degree less than or equal to 2 is in , so computing the Hilbert function is a little bit boring here.

The value of the Hilbert function is more interesting: some monomials of degree are indeed elements of , and thus is not 0 but 4. In particular, we have For we have

From this point forward, increasing will not change the value of the Hilbert function – the dimension of as an -vector space grows with the same rate as the dimension of , since all monomials not in are of lesser total degree. Expressed differently, all monomials above the line are elements of both and – the values of the Hilbert function doesn't change by increasing

From this, two things follow:

- The Hilbert polynomial of is the constant 18. (That's why I said it's relatively boring. A more interesting case follows.)

- The Hilbert regularity of is 6, since for all

Example: Ideal of Positive Dimension

As hinted at above, whether or not is a monomial ideal does not matter for computing the Hilbert function or the Hilbert polynomial, because behaves exactly the same. What does matter, though, is the dimension of In the previous example, was of dimension 0, and the Hilbert polynomial of was a constant. That's not a coincidence.

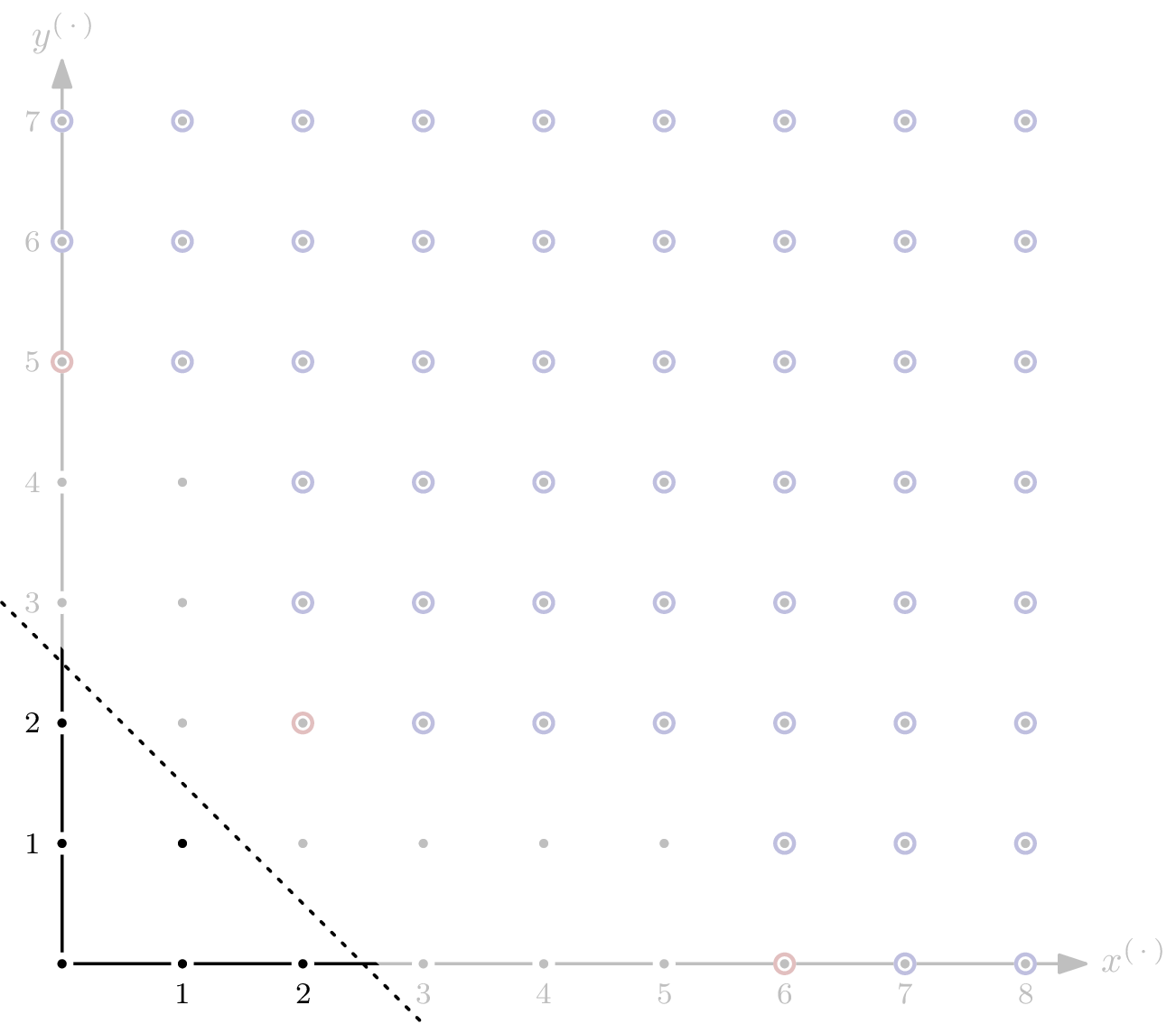

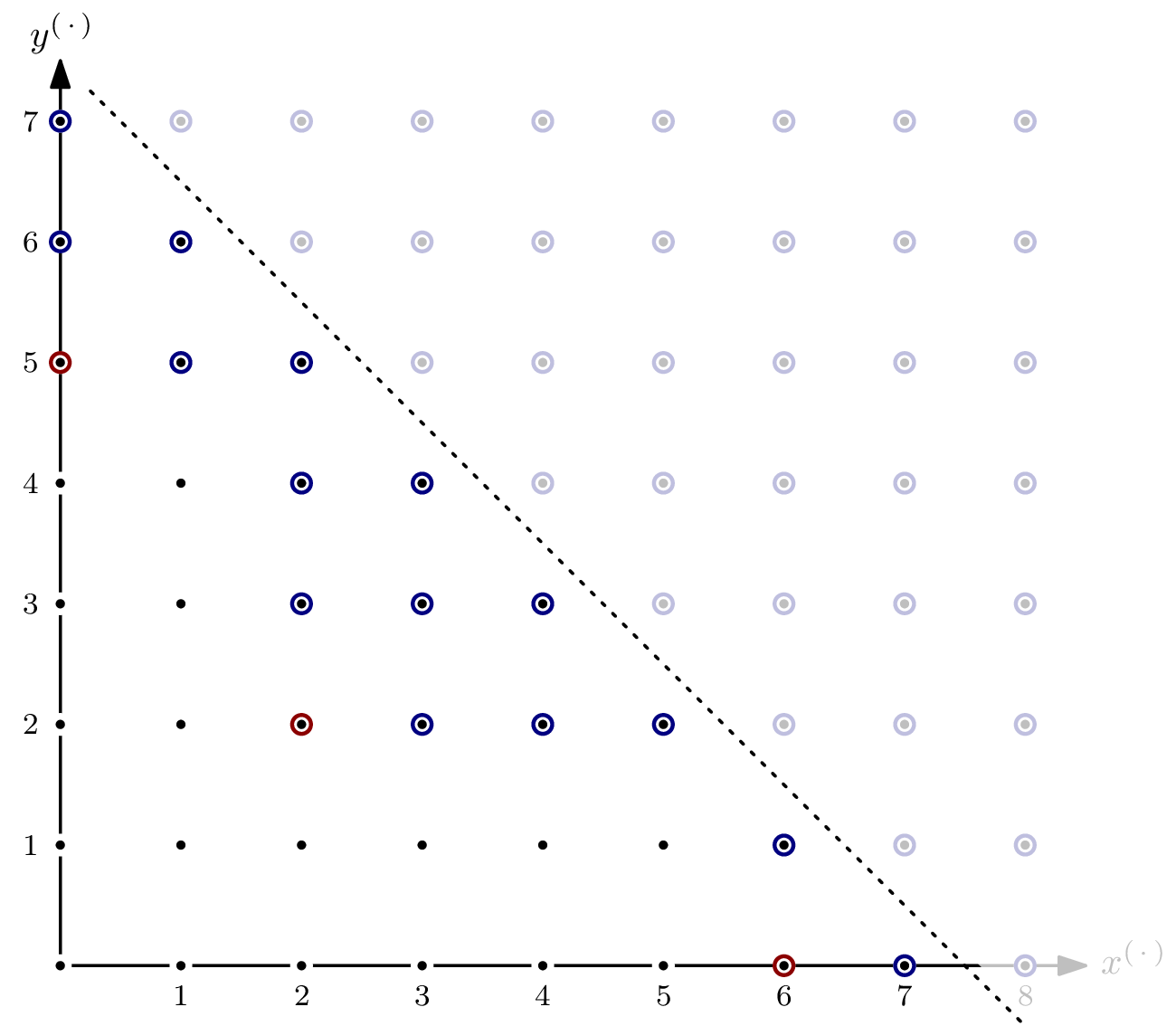

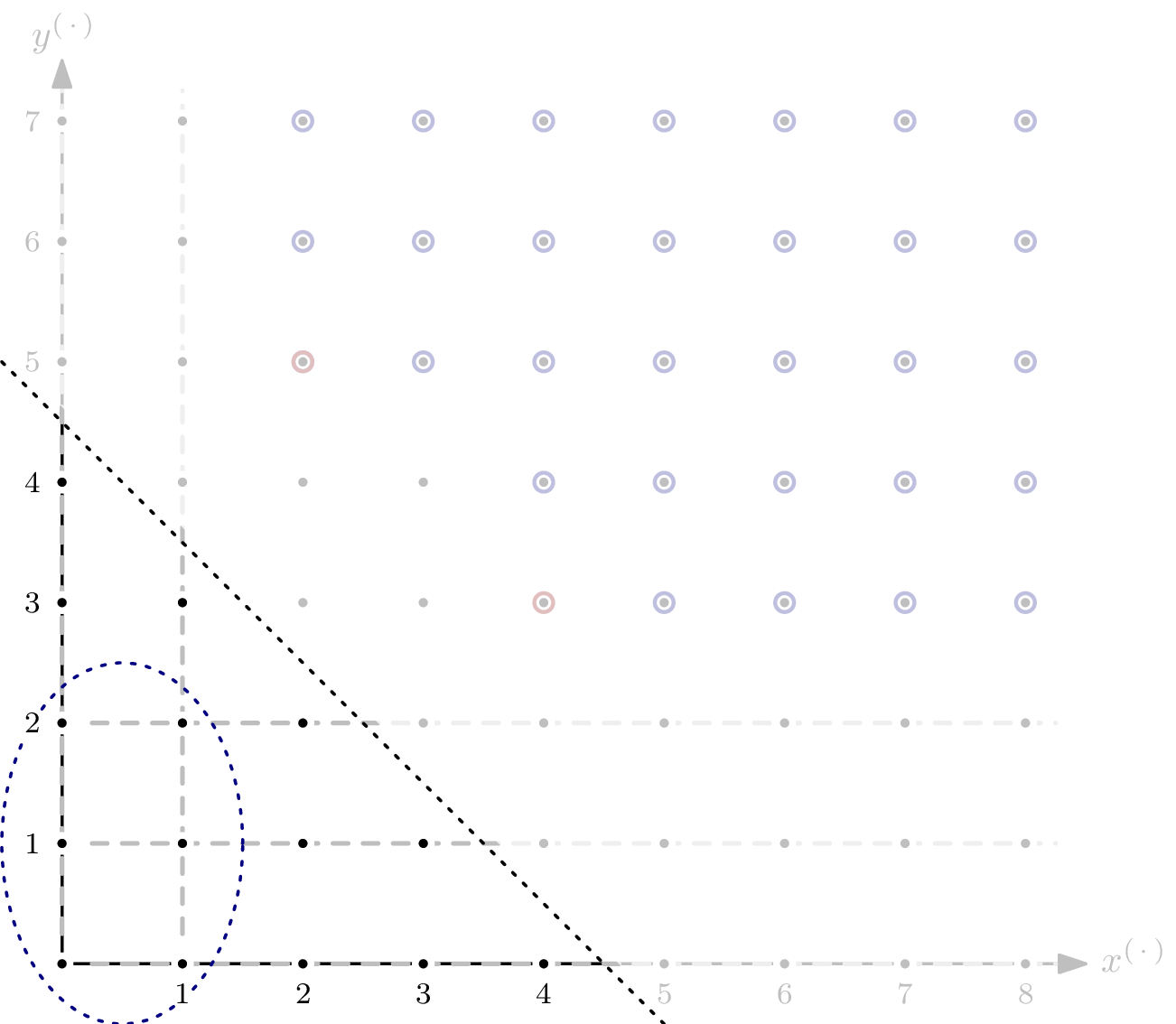

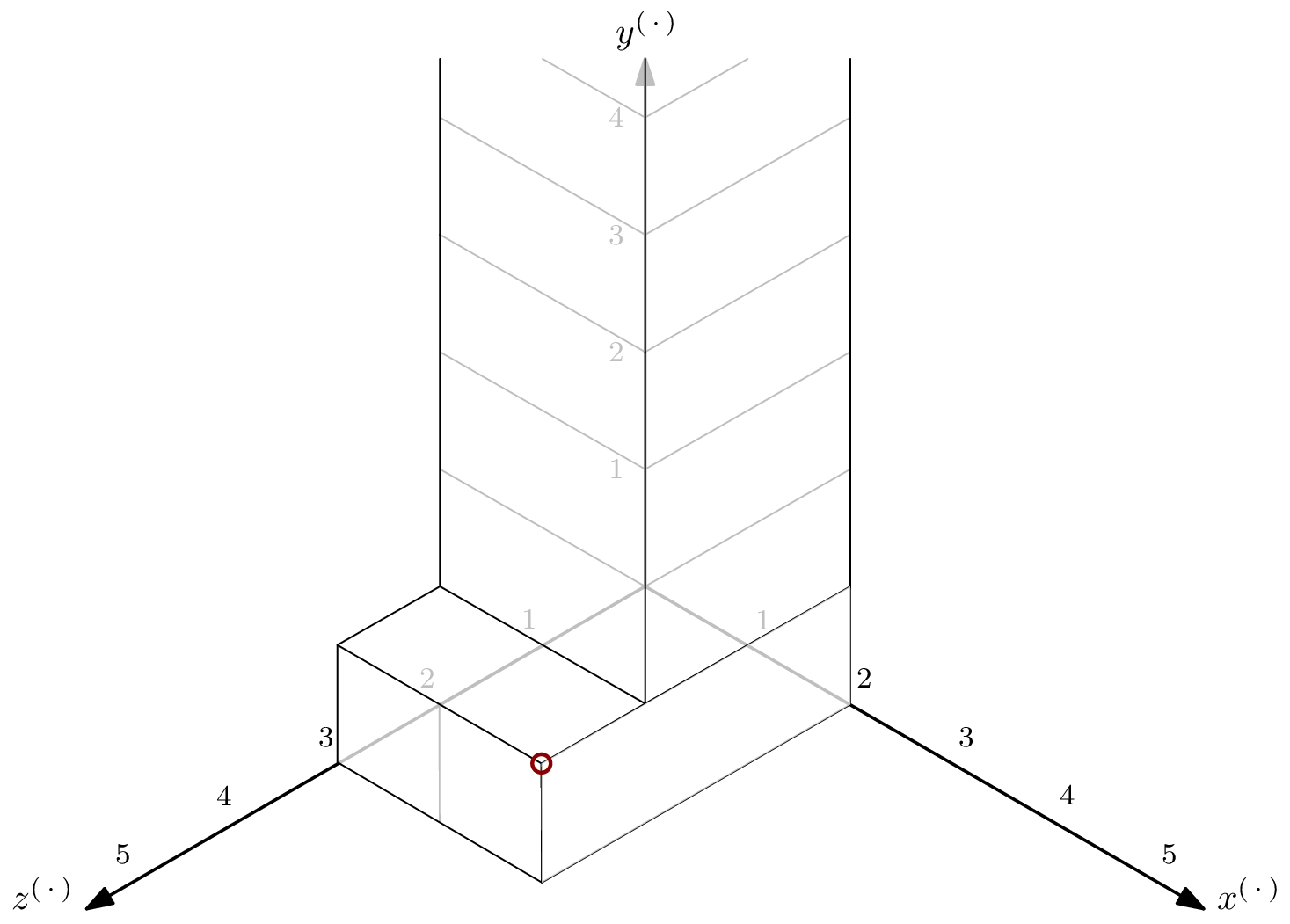

Even though the ideal spanned by the polynomial system modelling an AOC will usually be zero-dimensional, it's interesting to see what happens if it isn't. Let's take and

As you can see, there are parts of the staircase that extend to infinity. That's a direct consequence of having positive dimension, or, equivalently, variety not having finitely many solutions. In the picture below, I've indicated the staircase's five subspaces of dimension by dashed, gray lines.

For the Hilbert function, only monomials in of degree are relevant. For each of the five subspaces, we can express the matching number of elements as a polynomial in

The sum of these five polynomials is which corresponds to the total number of monomials in the staircase of of degree that lie in the staircase's 1-dimensional subspaces – except that some elements are counted more than once. Since the intersection of two orthogonal 1-dimensional subspaces is of dimension , we can simply add a constant correction term. For ideals with more than two variables, we generally need to add correction terms of higher dimension, corresponding to polynomial summands of degree higher than 0.

Adding the correction term of gives as a (preliminary) Hilbert polynomial for We're not completely done yet: for , there are monomials not in that are also not in any of the 1-dimensional subspaces – for example Of those, we only have finitely many. In the example, it's 4.

After adding the second correction term, we have .

By finding the Hilbert polynomial, we also computed the Hilbert regularity of it's In other words, for we have

This coincides with the distance of the closest diagonal Generally speaking, the diagonal is a hyperplane. such that all “overlapping” as well as all zero-dimensional parts of the staircase are enclosed – the red and blue dashed circles, respectively, in above picture.

More Variables, More Dimensions

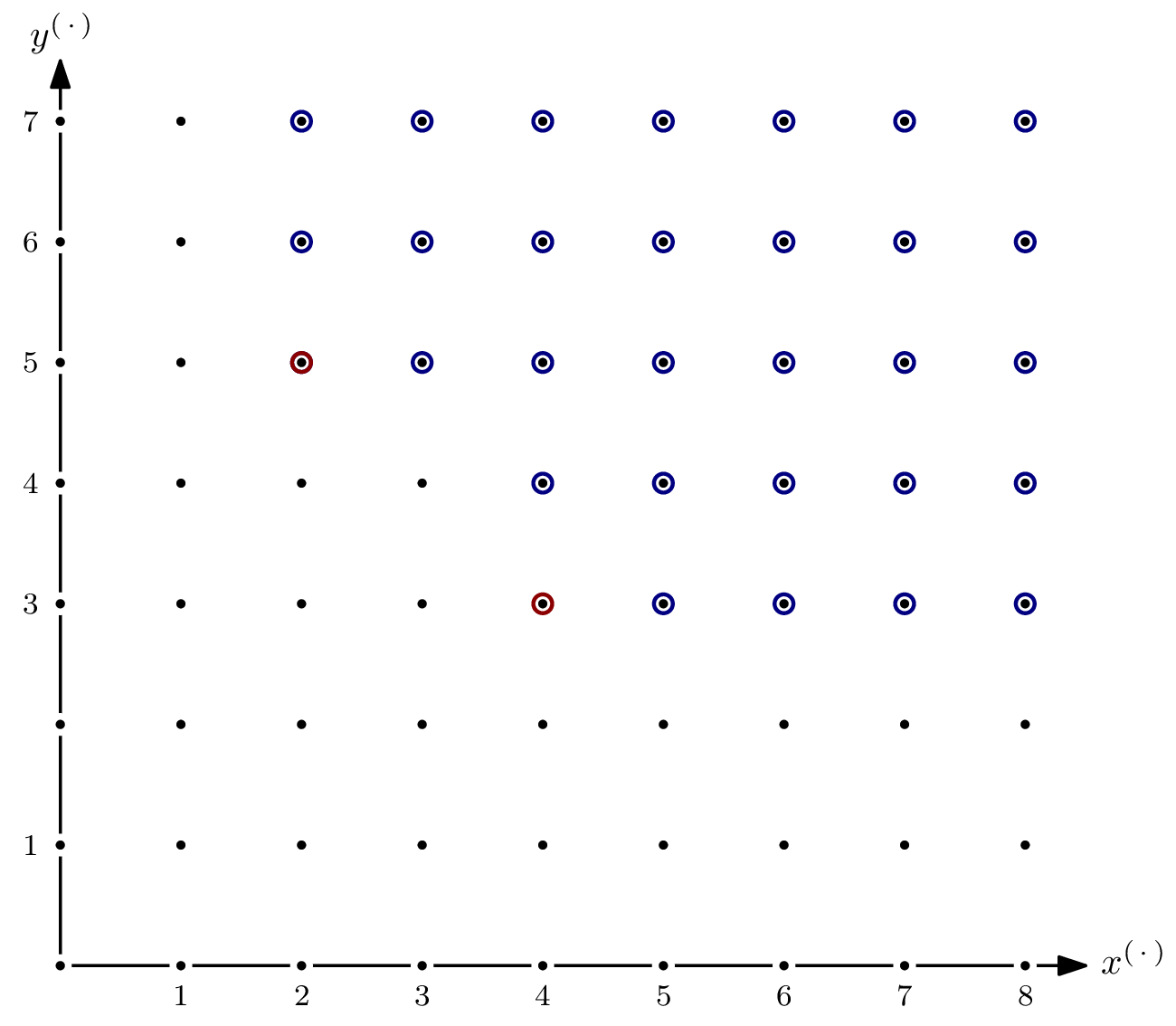

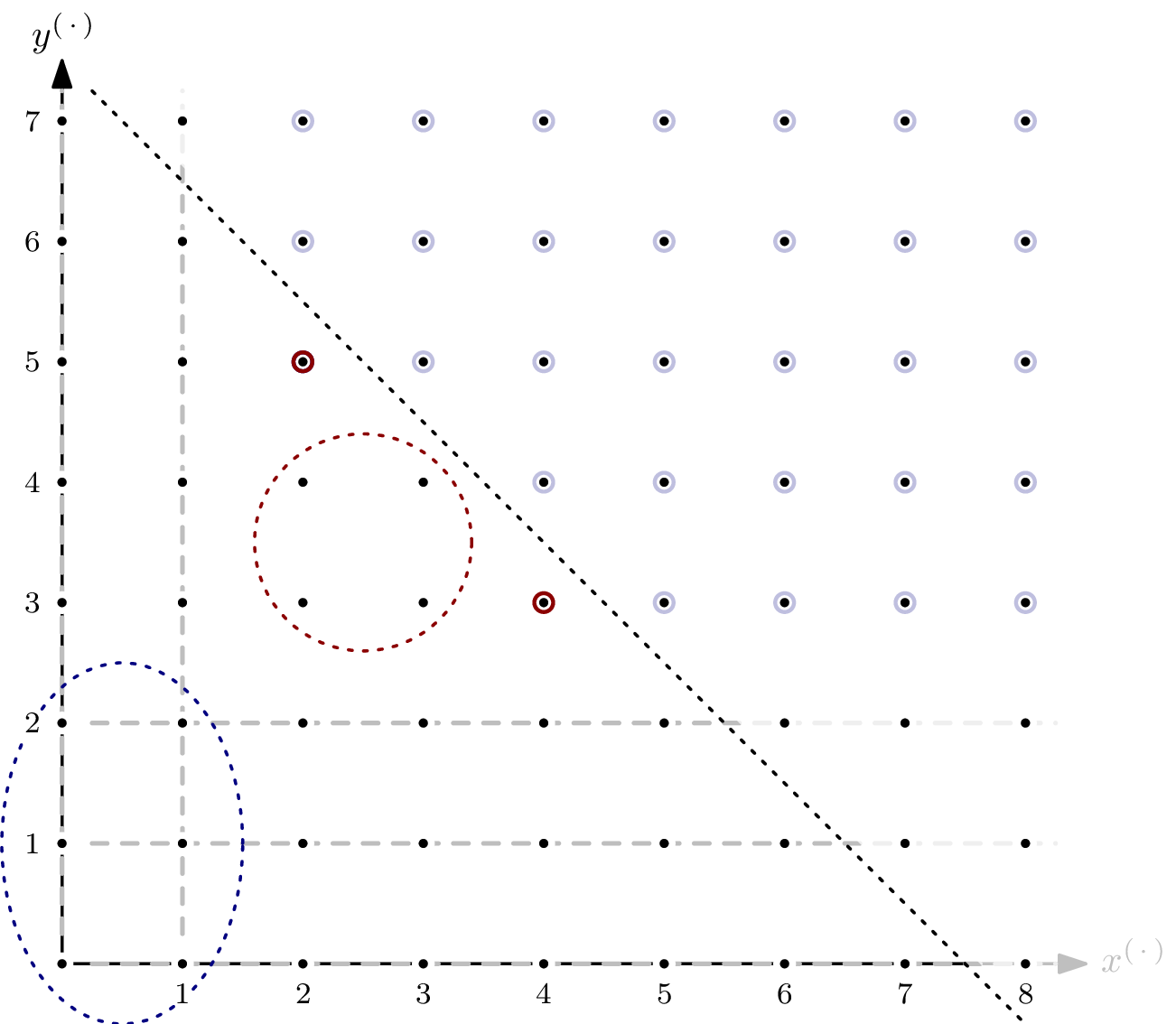

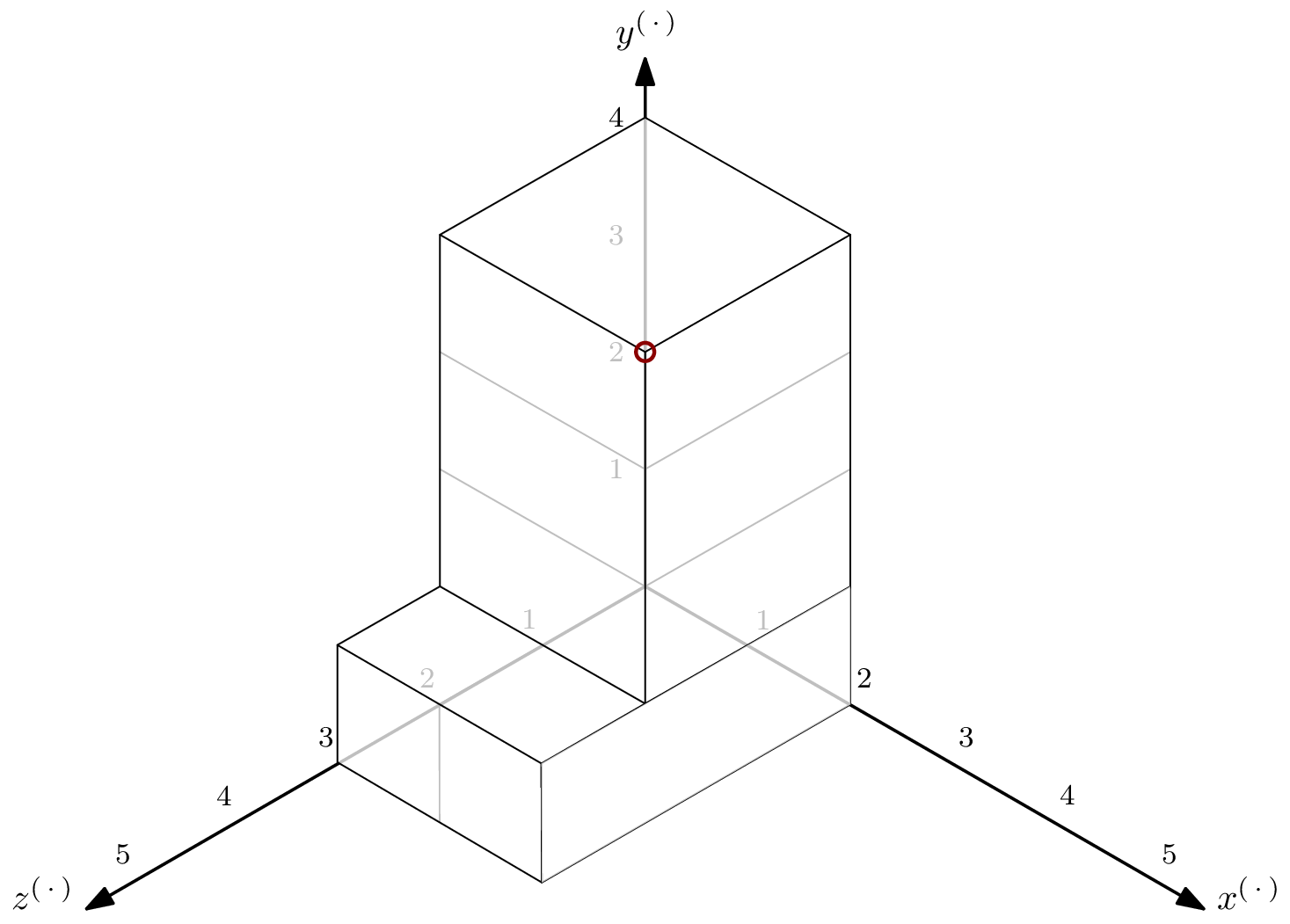

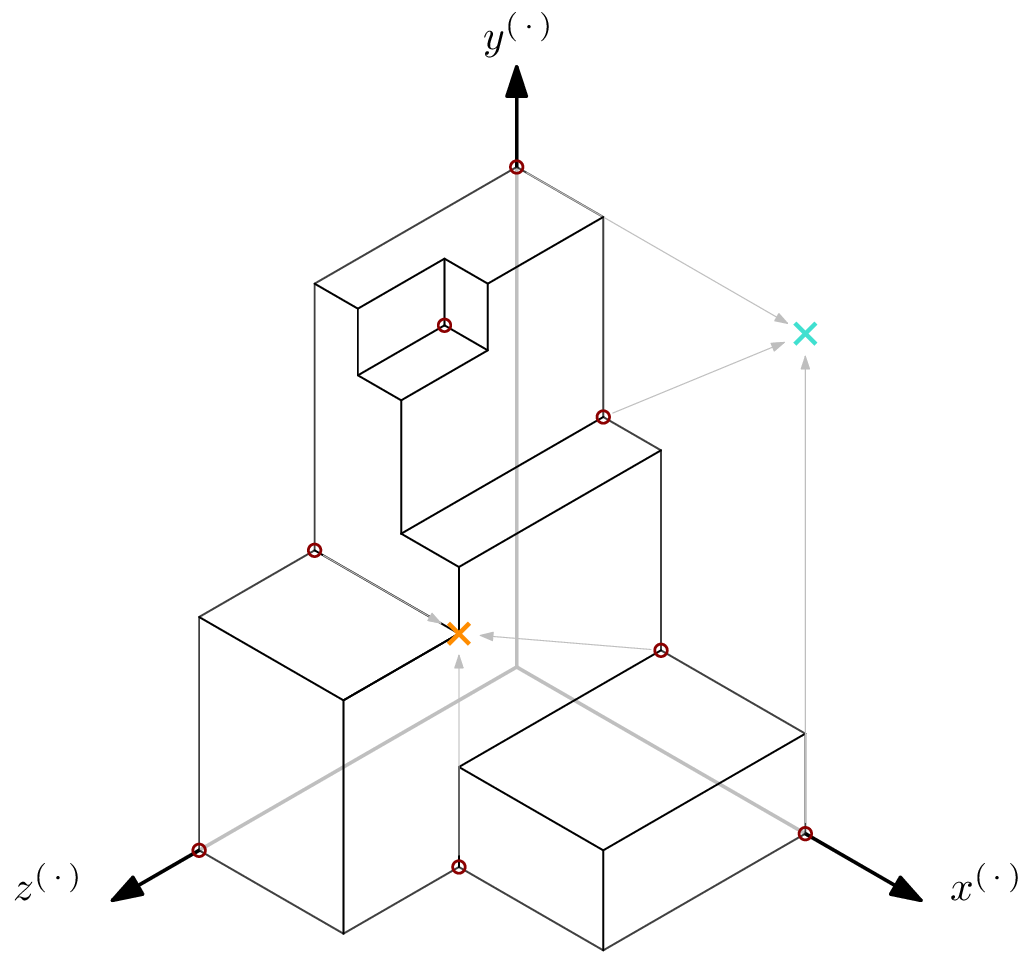

The intuition of the 2-dimensional examples above translate to higher dimensions: find the most distant corner of the blue circle – the parts where positive-dimensional subspaces of the variety overlap – and the red circle – the variety's part of dimension zero – and take the distance of the farther of these two corners as the Hilbert regularity. However, finding the corners becomes less trivial. Let me demonstrate with a staircase consisting of three “tunnels” that we'll successively modify.

Above staircase is defined by No monomials exist in the red bubble – every point is part of a subspace of dimension 1. The blue corner is the monomial defining the enclosing space of the parts where positive-dimensional subspaces overlap. It coincides with the least common multiple (lcm) of the three elements of namely The Hilbert regularity can be read off from : the hyperplane's required distance is

That was easy enough. Let's take a look at The staircase looks similar, with the exception for the “ -tunnel.”

Even though from above is still on the “border” of just as it was for it no longer defines the enclosing space we're looking for. Note that the lcm of the elements in is still but the Hilbert regularity is now defined by the lcm of only two elements, and giving The Hilbert regularity has changed to

Let's modify the staircase a little bit more, and look at

he Hilbert regularity can once again be found by looking at but the reason has changed. This time around, is the most distant corner of the volume enclosing all monomials not appearing in positive-dimensional subspaces of the variety – that's the red bubble from before. And since only one “tunnel” remains, there's no more overlap in positive-dimensional subspaces – the blue bubble, and with it the blue dot, have disappeared. Note that is once again the lcm of the three elements of The Hilbert regularity is now again 3.

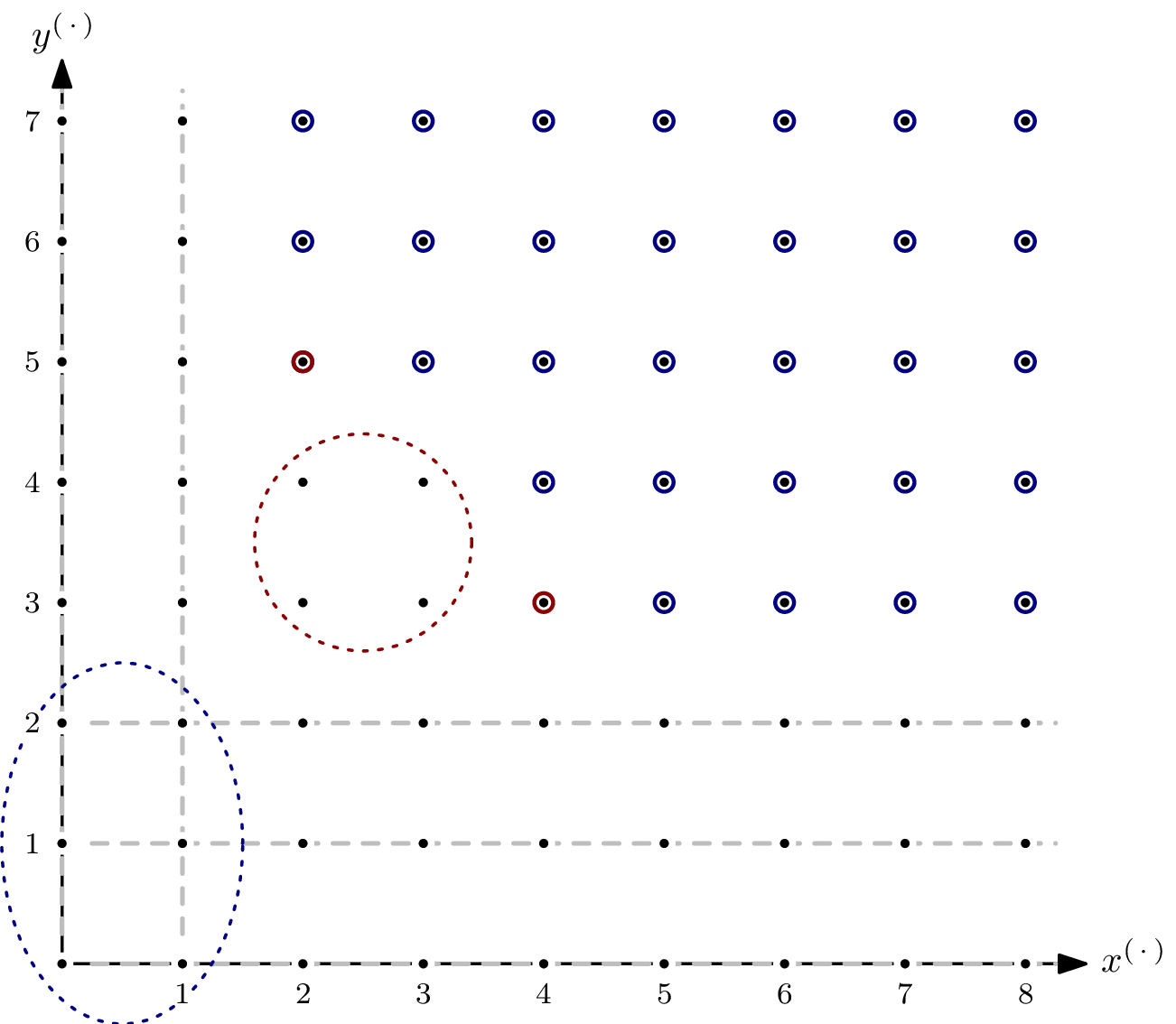

For completeness sake, let's close off the last of the tunnels by adding to Monomial being the lcm of and is the Hilbert regularity-defining corner. The Hilbert regularity for is 5.

Computing the Regularity in sagemath

After having understood the Hilbert regularity, it's time to throw some sagemath at the problem. Below, you can find two approaches. The first uses the staircase, like in the examples above. The second is based on the Hilbert series, which is explained further below.

The nice-to-visualize geometric approach

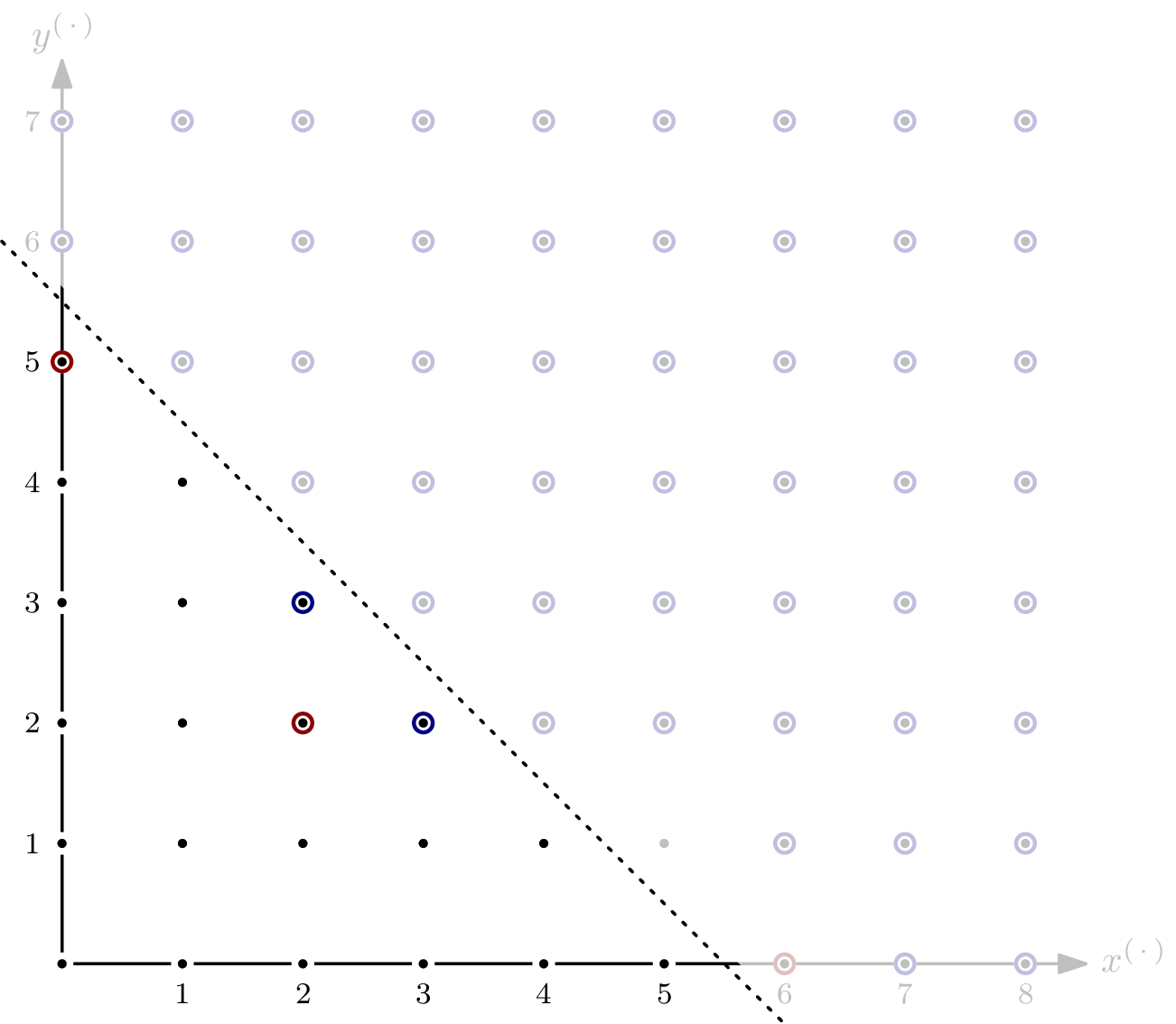

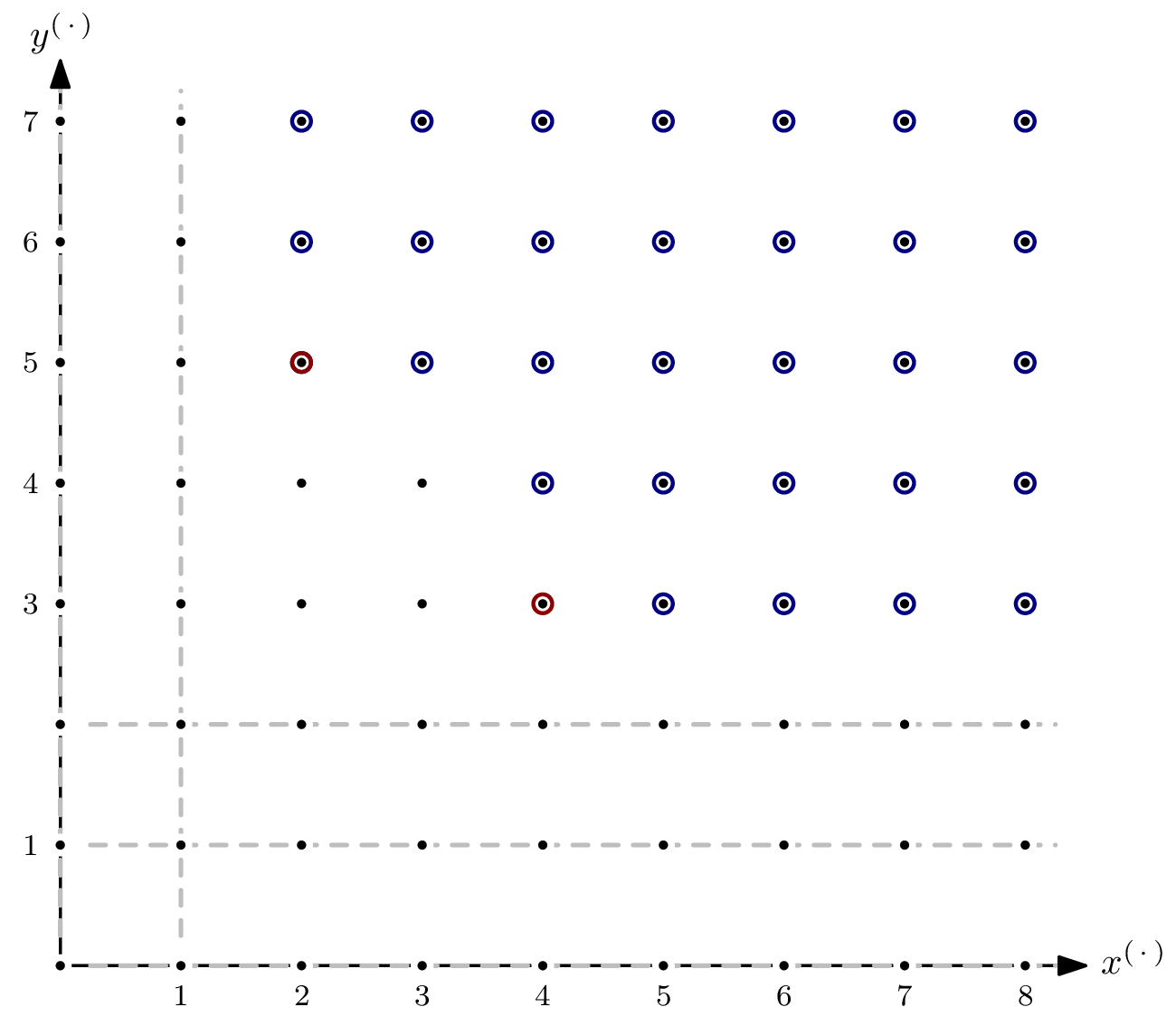

Using the geometric intuitions from above, we can compute the Hilbert regularity by finding all of the staircase's corners. The code below only works for ideals of dimension 0 Technically, the code works for any dimension ≤ 0, i.e., if there are no common solutions to the polynomials in the ideal, it also works. since the polynomial models I do research on are always of that kind.

The code computes all lcm's of subsets of size of the Gröbner basis' leading monomials, which we have determined as the points of interest above. Any such lcm corresponding to a monomial that's flush to one of the 0-planes is ignored as being degenerate – for example, the turquoise cross in below picture. Next, we check if the lcm-monomial is actually a corner of the staircase, by moving one step towards the origin along all axes. If the resulting monomial is in the ideal, it is not in the staircase, and thus not a corner – for example, the red cross in above picture. If, from the moved-to monomial, moving one step along any axis crosses the border of the staircase, we found a corner – for example, both of the blue crosses in above picture, but not the orange cross in the picture below. The distance of the furthest such corner corresponds to the Hilbert regularity.

With those pictures in mind, following the code should be fairly doable:

import combinations

def hilbert_regularity_staircase(I):

'''

Compute the Hilbert regularity of R/I where R = I.ring() and I.dimension() <= 0.

This is done by iterating through all n-tuples of the Gröbner basis' leading monomials,

computing their lcm, then determining if that lcm is actually a corner of the staircase.

The corner that is the furthest from the origin determines the Hilbert regularity.

'''

if I.dimension() > 0:

raise NotImplementedError(f"Ideal must be of dimension 0 or less, but has dim {I.dimension()}.")

gens = I.ring().gens() # all variables

n = len(gens)

xyz = reduce(operator.mul, gens, 1)

gb_lm = [f.lm() for f in I.groebner_basis()]

I_lm = Ideal(gb_lm)

hil_reg = 0

for lms in combinations(gb_lm, n):

m = lcm(lms)

# are we considering a meaningful combination of lm's?

# i.e., does every variable make an appearance in m?

if len(m.degrees()) != n or not all(m.degrees()):

continue

m = m / xyz # 1 step towards origin along all axes

assert I.ring()(m) == m.numerator() # no negative exponents, please

m = I.ring()(m)

# are we in a corner of the staircase?

# i.e., not in the ideal, but moving 1 step along any axis, we end up in the ideal?

if not m in I_lm and all([v*m in I_lm for v in gens]):

hil_reg = max(hil_reg, m.degree())

return hil_reg

The rigorous mathematical approach

The Hilbert regularity can also be computed using the Hilbert series. The Hilbert series is the formal power series of the (projective) Hilbert function: We can either look at the projective Hilbert function, or equivalently subtract two consecutive values of the affine Hilbert function, as I am doing here. The Hilbert series' coefficient of monomial is the number of monomials of degree Unlike before, we only consider equality now! that are in but not in The Hilbert regularity coincides with the degree of the highest-degree consecutive term having positive coefficient.

For example, take from the very first example again, where we had Evaluating the Hilbert function of gives The Hilbert series of is And indeed, the sought-for term has degree which we have seen to be the Hilbert regularity of

Conveniently, sagemath has a method for computing the Hilbert series of an ideal, albeit only for homogeneous ideals. As we have established above, the Hilbert regularity does not change when looking only at the leading monomials of the ideal's Gröbner basis, which is a homogeneous ideal. Thus, finally, we have a catch-all piece of code for computing the Hilbert regularity.

def hilbert_regularity(I):

'''

Compute the Hilbert regularity of R/I using the Hilbert series of R/I.

'''

gb_lm = [f.lm() for f in I.groebner_basis()]

I_lm = Ideal(gb_lm)

hil_ser = I_lm.hilbert_series()

hil_reg = hil_ser.numerator().degree() - hil_ser.denominator().degree()

return hil_reg

Summary

In this post, we have looked at the Hilbert function, the Hilbert polynomial, the Hilbert regularity, and the Hilbert series. For the first two of those, extensive examples have built intuition for what the Hilbert regularity is – and why it is not trivial to compute using this intuition. Instead, the Hilbert series gives us a tool to find the Hilbert regularity fairly easily.

References

- Magali Bardet, Jean-Charles Faugère, Bruno Salvy, and Bo-Yin Yang. Asymptotic behaviour of the degree of regularity of semi-regular polynomial systems. In Proceedings of MEGA, volume 5, 2005.

- Alessio Caminata and Elisa Gorla. Solving multivariate polynomial systems and an invariant from commutative algebra. arXiv preprint arXiv:1706.06319, 2017.

- David A. Cox, John Little, and Donal O’Shea. Ideals, Varieties, and Algorithms: An Introduction to Computational Algebraic Geometry and Commutative Algebra. Springer Science & Business Media, 2013.